Knowledge Graphs and Retrieval-Augmented Generation: A Guide to Improving RAG Systems

An insight from Sara A. Bejandi

Introduction

In today’s digital world, the efficient networking, management and utilization of knowledge is decisive. Knowledge graphs are structured representations of knowledge in the form of entities and their relationships. They facilitate the understanding and retrieval of complex connections between different entities and concepts. Knowledge graphs can improve Retrieval-Augmented Generation (RAG) systems by providing structured data, explainability and access to factual information, which increases accuracy. However, knowledge graphs are not always available, especially in domain-specific applications. It is therefore necessary to create customized knowledge graphs from unstructured data in order to exploit their advantages. This article examines how knowledge graphs can be created from text and explains various approaches and technologies that contribute to the improvement of RAG systems.

Knowledge Graphs vs. LLMs

Knowledge graphs and large language models (LLMs) are two different but complementary approaches to processing and using information. While LLMs can analyze and process large amounts of text, knowledge graphs offer a structured representation of knowledge. This structure enables relationships between entities to be clearly defined and complex queries to be answered efficiently. In contrast, LLMs are based on statistical models that generate predictions and answers, but do not always reveal the underlying structure and logic of the information.

Goals of knowledge graph creation

To use knowledge graphs in RAG systems, we must first construct high-quality knowledge graphs from unstructured documents. Once the knowledge graph has been created, a natural language query or question can be converted into a knowledge graph query to provide precise factual answers. This method enables the efficient extraction and use of knowledge from large volumes of text, which is particularly advantageous in data-intensive areas.
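To make the idea of converting a question into a knowledge graph query more concrete, here is a minimal sketch. It assumes a toy graph stored as (subject, relation, object) triples and a single hand-written question template; the relation name `uses_dataset`, the template and the helper functions are illustrative assumptions. In a real RAG system this translation step would typically be handled by an LLM or a dedicated query generator.

```python
import re

# Toy knowledge graph as (subject, relation, object) triples.
# The relation name "uses_dataset" is an assumption made for this sketch.
TRIPLES = {
    ("Grapher", "uses_dataset", "WebNLG+"),
    ("PiVe", "uses_dataset", "GenWiki"),
    ("PiVe", "uses_dataset", "WebNLG"),
}

# Hypothetical question templates: each maps a question shape to a relation.
QUESTION_PATTERNS = [
    (re.compile(r"which datasets? does (?P<subject>.+?) use\??$", re.IGNORECASE),
     "uses_dataset"),
]


def question_to_query(question):
    """Translate a question into a (subject, relation) graph query, or None."""
    for pattern, relation in QUESTION_PATTERNS:
        match = pattern.match(question.strip())
        if match:
            return match.group("subject"), relation
    return None


def answer(question):
    """Run the translated query against the triples and return matching objects."""
    query = question_to_query(question)
    if query is None:
        return []
    subject, relation = query
    return sorted(
        o for s, r, o in TRIPLES
        if s.lower() == subject.lower() and r == relation
    )


print(answer("Which datasets does PiVe use?"))
```

Because the answer is read directly from stored triples, every returned fact can be traced back to an explicit edge in the graph.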

Methods of knowledge graph creation

This section presents recent research on the construction of knowledge graphs from text, with a focus on techniques that utilize large language models (LLMs) and transformers.

Fine-tuning of language models (LLMs) for triple extraction

- Fine-tuning: Adaptation of LLMs on annotated examples so that they identify entities and relationships more precisely.

- Zero-shot triple extraction: Use of LLMs without prior training on task-specific data sets.

- Few-shot triple extraction: Use of only a few labeled examples, so that good accuracy can be reached with minimal training effort.
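The difference between the zero-shot and few-shot settings can be illustrated with a small prompt-building sketch. The prompt wording, the `subject | relation | object` output format and both helper functions are assumptions made for illustration; the call to an actual LLM is deliberately left out.

```python
def build_few_shot_prompt(examples, text):
    """Build an extraction prompt; an empty example list gives a zero-shot prompt."""
    parts = ["Extract (subject | relation | object) triples from the text."]
    for sentence, triples in examples:
        parts.append(f"Text: {sentence}")
        parts.append("Triples: " + "; ".join(" | ".join(t) for t in triples))
    parts.append(f"Text: {text}")
    parts.append("Triples:")
    return "\n".join(parts)


def parse_triples(completion):
    """Parse 'a | b | c; d | e | f' into tuples, skipping malformed chunks."""
    triples = []
    for chunk in completion.split(";"):
        fields = [field.strip() for field in chunk.split("|")]
        if len(fields) == 3 and all(fields):
            triples.append(tuple(fields))
    return triples


examples = [
    ("Neo4j is a graph database.", [("Neo4j", "is_a", "graph database")]),
]
prompt = build_few_shot_prompt(examples, "NebulaGraph is a distributed graph database.")
print(prompt)
```

Parsing the model output defensively matters in practice, since LLM completions do not always follow the requested format.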

Zero-shot triple extraction

Zero-shot approaches offer flexibility in triple extraction. Works such as ChatIE [1] use multi-stage frameworks in which chat-based prompts first extract entity types and then the corresponding entities, from which complete triples are derived. Furthermore, the lack of training data for triple extraction is addressed by approaches that generate training data using zero-shot methods. GenRDK [2] supports triple extraction by guiding ChatGPT through a series of retrieval prompts to incrementally generate long, annotated text data. In addition, RelationPrompt [3] reframes the zero-shot problem as synthetic data generation and uses language model prompts to generate synthetic relation samples, especially for unseen relations.

- ChatIE [1]: A two-stage framework that uses chat-based prompts to define entity types and relationship lists.

- GenRDK [2]: A chain of retrieval prompts that instructs ChatGPT to generate long, annotated text data step by step.

- RelationPrompt [3]: Creation of synthetic relation samples by language model prompts to train another model for the zero-shot task.
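A staged, chat-based extraction of this kind can be sketched as follows. This is only loosely modeled on the two-stage idea and is not the implementation from [1]: the prompt wording, the `subject -> object` answer format and the canned `fake_llm` stand-in are all assumptions, used so that the example runs without access to a real model.

```python
def extract_two_stage(text, relation_types, llm):
    """Stage 1 asks which relations occur; stage 2 asks for their arguments."""
    stage1 = llm(
        "Which of these relations occur in the text? "
        f"Options: {', '.join(relation_types)}\nText: {text}"
    )
    present = [relation for relation in relation_types if relation in stage1]

    triples = []
    for relation in present:
        stage2 = llm(
            f"List every subject and object linked by '{relation}' "
            f"as 'subject -> object', one per line.\nText: {text}"
        )
        for line in stage2.splitlines():
            if "->" in line:
                subject, obj = (part.strip() for part in line.split("->", 1))
                triples.append((subject, relation, obj))
    return triples


def fake_llm(prompt):
    """Canned responses standing in for a chat model (assumption for this sketch)."""
    if prompt.startswith("Which of these relations"):
        return "developed_by"
    if "'developed_by'" in prompt:
        return "Cypher -> Neo4j"
    return ""


result = extract_two_stage(
    "Cypher is a query language developed by Neo4j.",
    ["developed_by", "located_in"],
    fake_llm,
)
print(result)
```

Splitting the task into two questions keeps each prompt simple, at the cost of one additional model call per detected relation.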

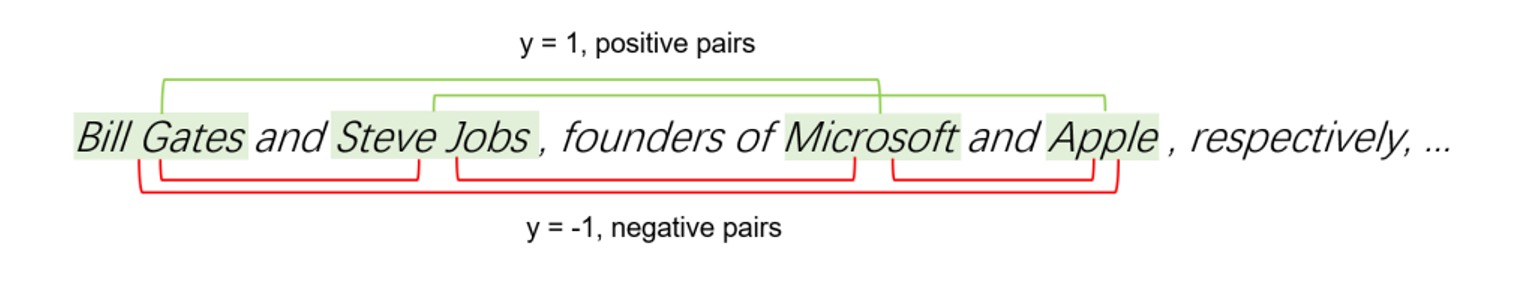

The diagram illustrates the identification of positive and negative pairs in a knowledge graph. Positive pairs (green) represent correct entity-relationship combinations, while negative pairs (red) indicate incorrect assignments.
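One common way to obtain negative pairs for a set of known positive triples is to corrupt them, for example by replacing the object with another entity. The sketch below illustrates that general idea only; whether the diagram's source builds its negative pairs this way is not stated, and the function name and example triples are assumptions.

```python
import random


def corrupt_tails(positives, seed=0):
    """Create one negative triple per positive by swapping in a wrong object."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    known = set(positives)
    entities = sorted({e for s, _, o in positives for e in (s, o)})

    negatives = []
    for subject, relation, _ in positives:
        # Candidates must differ from the subject and must not form a known fact.
        candidates = [
            e for e in entities
            if e != subject and (subject, relation, e) not in known
        ]
        if candidates:
            negatives.append((subject, relation, rng.choice(candidates)))
    return negatives


positives = [
    ("Neo4j", "is_a", "graph database"),
    ("Cypher", "developed_by", "Neo4j"),
    ("NebulaGraph", "is_a", "graph database"),
]
negatives = corrupt_tails(positives)
print(negatives)
```

Filtering against the known triples avoids labeling a true fact as negative, although facts that are true but missing from the graph can still slip through.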

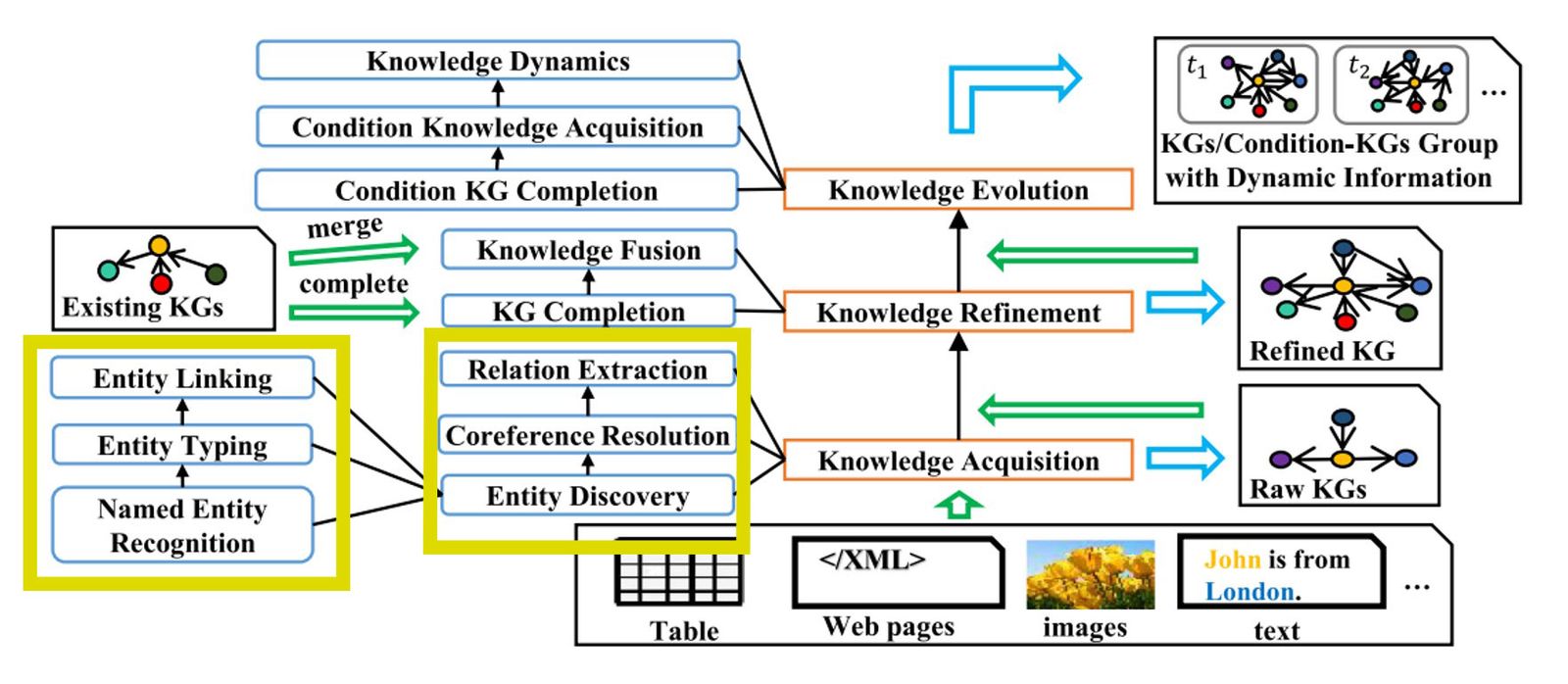

Knowledge Graph Pipeline

The process of constructing a knowledge graph comprises a number of steps, which are shown in the following figure. First, knowledge acquisition creates a raw knowledge graph from unstructured input data. Next, the raw knowledge graph is refined or enriched using existing knowledge graphs. Finally, the knowledge evolution step manages the development of the knowledge graph and dynamic information. In this article, we focus only on the first step, the construction of the knowledge graph from unstructured text data. A detailed illustration of the pipeline can be found below:

The Knowledge Graph Pipeline shows the steps for creating Knowledge Graphs from texts, starting with the extraction of entities and relationships through to the final integration into the Knowledge Graph.
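The three pipeline steps can be read as a chain of functions. The following sketch is a deliberately simplified illustration under strong assumptions: acquisition is reduced to a single "X is a Y" pattern, refinement to an alias lookup, and evolution to merging new triples into an existing graph. The function names and the alias table are hypothetical.

```python
import re


def acquire(text):
    """Knowledge acquisition: a naive 'X is a Y' pattern stands in for an extractor."""
    pattern = re.compile(r"(\w[\w ]*?) is an? ([\w ]+?)\.")
    return {(s.strip(), "is_a", o.strip()) for s, o in pattern.findall(text)}


def refine(raw_triples, aliases):
    """Knowledge refinement: map surface forms to canonical entity names."""
    return {(aliases.get(s, s), r, aliases.get(o, o)) for s, r, o in raw_triples}


def evolve(graph, new_triples):
    """Knowledge evolution: merge new triples and report what was added."""
    added = new_triples - graph
    return graph | added, sorted(added)


text = "Nebula Graph is a graph database. Neo4j is a graph database."
raw = acquire(text)
refined = refine(raw, {"Nebula Graph": "NebulaGraph"})
existing = {("Neo4j", "is_a", "graph database")}
graph, added = evolve(existing, refined)
print(added)
```

Each stage only depends on the output of the previous one, so the simple pattern extractor could be replaced by an LLM-based one without touching the rest.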

End-to-end solutions for knowledge graphs and retrieval augmented generation

There are various end-to-end solutions for creating knowledge graphs. Two notable approaches are Grapher and PiVe, which use different methods and frameworks.

Grapher

Grapher [4] uses a multi-stage process to create knowledge graphs. In the first step, a pretrained language model extracts entities from the text. In the second step, relationships are generated between these entities. The approach builds on the WebNLG+ corpus and treats node and relation generation as a sequence-based problem. By combining these natural language processing techniques, Grapher aims to create accurate and comprehensive knowledge graphs.
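The separation into a node step and an edge step can be sketched as follows. This is not the Grapher implementation: string matching against a list of known entities stands in for the language model that generates nodes, and a lookup table stands in for the learned edge generator. All names are assumptions.

```python
from itertools import permutations


def generate_nodes(text, known_entities):
    """Stage 1: pick the entities mentioned in the text (stand-in for a model)."""
    return [entity for entity in known_entities if entity in text]


def generate_edges(nodes, predict_edge):
    """Stage 2: query an edge predictor for every ordered pair of nodes."""
    triples = []
    for head, tail in permutations(nodes, 2):
        relation = predict_edge(head, tail)
        if relation is not None:  # None means "no edge between this pair"
            triples.append((head, relation, tail))
    return triples


# Hypothetical edge predictor backed by a lookup table.
EDGE_TABLE = {("Cypher", "Neo4j"): "developed_by"}


def toy_predict_edge(head, tail):
    return EDGE_TABLE.get((head, tail))


text = "Cypher is a query language developed by Neo4j."
nodes = generate_nodes(text, ["Cypher", "Neo4j", "NebulaGraph"])
triples = generate_edges(nodes, toy_predict_edge)
print(triples)
```

Note that checking every ordered pair grows quadratically with the number of nodes, which is one reason the edge step deserves its own dedicated component.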

PiVe

PiVe [5] (Prompting with Iterative Verification) relies on iterative verification to continuously improve the output of an LLM. A smaller language model acts as a verification module that checks the output of the LLM and improves it step by step using fine-grained correction instructions. PiVe uses data sets such as GenWiki and WebNLG to train the system and increase the accuracy of knowledge graph creation. The approach illustrates the value of iterative correction when LLMs generate graphs.
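The verification loop can be sketched in a few lines. This is a simplified illustration rather than the method from [5]: the generator and verifier are toy functions, the feedback is a missing triple instead of a textual correction instruction, and the names `iterative_verification`, `toy_generate` and `toy_verify` are assumptions.

```python
def iterative_verification(text, generate, verify, max_rounds=3):
    """Regenerate with accumulated corrections until the verifier is satisfied."""
    corrections = []
    triples = generate(text, corrections)
    for _ in range(max_rounds):
        feedback = verify(text, triples)
        if feedback is None:  # verifier has nothing left to correct
            break
        corrections.append(feedback)
        triples = generate(text, corrections)
    return triples, len(corrections)


# Toy setup: the "LLM" initially misses one triple, the "verifier" knows the answer.
REFERENCE = [
    ("PiVe", "uses_dataset", "GenWiki"),
    ("PiVe", "uses_dataset", "WebNLG"),
]


def toy_generate(text, corrections):
    triples = [REFERENCE[0]]
    triples += [c for c in corrections if c not in triples]
    return triples


def toy_verify(text, triples):
    for triple in REFERENCE:
        if triple not in triples:
            return triple  # fine-grained correction: the missing triple
    return None


triples, rounds = iterative_verification(
    "PiVe uses GenWiki and WebNLG.", toy_generate, toy_verify
)
print(triples, rounds)
```

The `max_rounds` limit keeps the loop bounded when the generator never satisfies the verifier.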

Frameworks for knowledge graph creation

In addition to ongoing research into knowledge graph creation with LLMs, frameworks such as Neo4j and LlamaIndex have integrated knowledge graphs into their RAG systems. Several other solutions also support the creation of knowledge graphs from text.

- Text2Cypher: Converts natural language into Cypher queries.

- LlamaIndex’s KnowledgeGraphQueryEngine: A query framework for knowledge graphs.

- NebulaGraph: A distributed graph database that also offers graph RAG. Such databases are crucial for the efficient storage and retrieval of large amounts of knowledge.

- OntoGPT [6]: A Python package for extracting structured information from text using LLMs.

- Understand: Creates small graphs based on seed concepts in natural language.
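To illustrate what a Text2Cypher step produces, the sketch below turns a question into a parameterized Cypher query using a single hand-written template. Real Text2Cypher systems typically rely on an LLM for this translation; the question template, the `name` property and the `USES_DATASET` relationship type are assumptions made for this example, and no database is contacted.

```python
import re

# Hypothetical question templates mapped to relationship types in the graph.
TEMPLATES = [
    (re.compile(r"which datasets? does (?P<name>.+?) use\??$", re.IGNORECASE),
     "USES_DATASET"),
]


def relation_to_cypher(relation_type):
    """Render a Cypher query for 'all objects linked to a named node'."""
    # Relationship types cannot be passed as query parameters, so validate them.
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", relation_type):
        raise ValueError(f"unsafe relationship type: {relation_type!r}")
    return (
        f"MATCH (s {{name: $name}})-[:{relation_type}]->(o) "
        "RETURN o.name AS answer"
    )


def text_to_cypher(question):
    """Return (query, parameters) for a recognized question, otherwise None."""
    for pattern, relation_type in TEMPLATES:
        match = pattern.match(question.strip())
        if match:
            return relation_to_cypher(relation_type), {"name": match.group("name")}
    return None


print(text_to_cypher("Which datasets does PiVe use?"))
```

Passing the entity name as a query parameter instead of inserting it into the query string avoids injection problems when the question comes from a user.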

Conclusion

The creation of knowledge graphs from texts requires a systematic approach that ranges from entity recognition to relationship generation. Modern methods use advanced language models and optimized frameworks to create precise and efficient knowledge graphs. By integrating verification steps and iterative improvements, these systems can be continuously optimized to deliver accurate and reliable results.


References:

1. Wei, Xiang, et al. “Zero-shot information extraction via chatting with ChatGPT.” arXiv preprint arXiv:2302.10205 (2023).

2. Sun, Qi, et al. “Consistency Guided Knowledge Retrieval and Denoising in LLMs for Zero-shot Document-level Relation Triplet Extraction.” arXiv preprint arXiv:2401.13598 (2024).

3. Chia, Yew Ken, et al. “RelationPrompt: Leveraging prompts to generate synthetic data for zero-shot relation triplet extraction.” arXiv preprint arXiv:2203.09101 (2022).

4. Melnyk, Igor, Pierre Dognin, and Payel Das. “Grapher: Multi-stage knowledge graph construction using pretrained language models.” NeurIPS 2021 Workshop on Deep Generative Models and Downstream Applications. 2021.

5. Han, Jiuzhou, et al. “PiVe: Prompting with iterative verification improving graph-based generative capability of LLMs.” arXiv preprint arXiv:2305.12392 (2023).

6. Caufield JH, Hegde H, Emonet V, Harris NL, Joachimiak MP, Matentzoglu N, et al. “Structured prompt interrogation and recursive extraction of semantics (SPIRES): A method for populating knowledge bases using zero-shot learning.” Bioinformatics 40.3 (2024): btae104.

7. Zhong, Lingfeng, et al. “A comprehensive survey on automatic knowledge graph construction.” ACM Computing Surveys 56.4 (2023): 1-62.

Footnotes:

[1] The image is adapted from [7], where the authors presented a comprehensive survey on automatic knowledge graph construction.