Microsoft VASA-1: Revolutionizing digital avatars through real-time audio synchronization

Introduction: What is Microsoft VASA-1 and why is it revolutionary?

VASA-1 is a new framework from Microsoft Research that generates realistic, expressive talking faces from a single portrait image and an audio clip. According to Microsoft, it surpasses previous methods in realism and is fast enough for real-time interaction and communication with avatars: the researchers report online generation of 512×512 video at up to 40 frames per second. In this blog article, we look at how VASA-1 works, where it can be applied, and the risks and ethical questions it raises.

The market for talking avatars: Diversity and innovation

The market for tools that generate talking avatars is diverse and highly competitive. Besides Microsoft's VASA-1, there are other AI (artificial intelligence) tools such as SadTalker and VideoReTalking that also create realistic animated digital faces. These models take different approaches suited to different use cases: SadTalker, for example, animates a single still image, while VideoReTalking re-synchronizes the lip movements in an existing video.

VASA-1: The technology explained

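According to Microsoft Research, VASA-1 models facial dynamics and head movements together in a learned face latent space. Generation can be steered with optional control signals such as eye gaze direction, head distance and emotion. These signals allow VASA-1 to portray a wide range of emotions and actions realistically, making it a valuable tool for digital content creation.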

VASA-1: A leap forward in audio-controlled avatar technology

VASA-1 sets itself apart from its predecessors and competitors through its ability to generate realistic, expressive faces in real time. Open-source models such as SadTalker and VideoReTalking deliver good results in avatar animation, but VASA-1 goes further: it combines lip movements synchronized with the audio, natural facial expressions, gaze direction and head movements into one coherent animation.

Illustration of the variety and adaptability of Microsoft VASA-1: from a single image and audio clip, the technology can generate a range of realistic facial expressions, opening up new possibilities for personalized digital interactions.


The use of VASA-1 in practice

Such technologies have many possible applications. From the entertainment industry to educational platforms, users can benefit from more realistic interaction with digital avatars. An AI chatbot you can talk to live is no longer a vision of the future: virtual assistants can already communicate with us through audio and video. At neuland.ai, we also use similar AI technology in our AI development work to make customer interactions easier.

Responsible AI: Risks and ethical considerations

As technologies such as VASA-1 advance, concerns about their ethical implications and risks grow as well. From the manipulation of digital content to the need to verify its authenticity, these technologies must be used responsibly.

Microsoft has not released VASA-1 because of concerns about potential misuse. The company has stated that it has no plans to release an online demo, API or product until it is certain that the technology will be used responsibly.

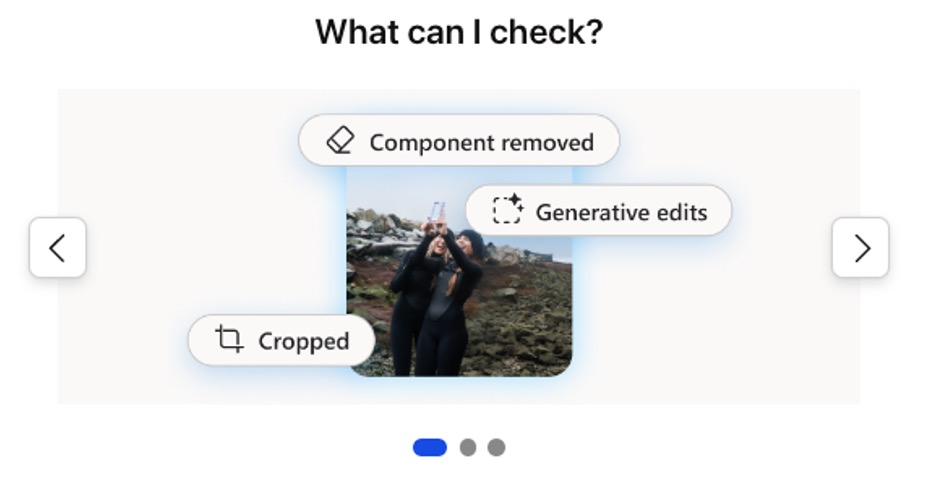

This screenshot shows Microsoft's Content Integrity tools, which let users check various aspects of digital images, including removed components, generative edits and whether an image has been cropped. Such tools are crucial for authenticating content and for combating disinformation spread through digital media.

Microsoft has introduced several measures to make the use of generative AI such as VASA-1 safer and to reduce the risk of deepfakes. A key element is a safety architecture built into its AI services to prevent misuse. It includes ongoing analysis by red teams, preventive classifiers, blocking of abusive prompts, automated testing and rapid bans of users who abuse the system.

Another important safeguard concerns content authenticity. Microsoft integrates content provenance and watermarks into its products for generating video, audio and images. This means adding metadata to the generated content, or embedding signals in it, that record the creator, the time of creation and the product used. This helps to distinguish genuine from fake content. The challenge is that malicious actors may use tools to strip this information. It is therefore important to combine provenance metadata with further methods, such as invisible watermarks, and to research ways of recognizing AI-generated content even after such signals have been removed.
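To illustrate how provenance metadata (creator, time of creation, product) can be bound to a piece of generated content, here is a minimal Python sketch. It is not Microsoft's implementation or the C2PA format. The field names, the hypothetical `SECRET_KEY` and the helper functions `make_manifest` and `verify_manifest` are assumptions made for illustration. For brevity it uses a shared-secret HMAC, whereas provenance standards such as C2PA rely on certificate-based public-key signatures.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the generating service (illustration only;
# real provenance systems use public-key signatures and certificates).
SECRET_KEY = b"demo-signing-key"


def _sign(record: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, creator: str, product: str, created_at: str) -> dict:
    """Build a provenance record bound to the content via its SHA-256 hash."""
    record = {
        "creator": creator,
        "created_at": created_at,
        "product": product,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    record["signature"] = _sign(record)
    return record


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the metadata is unaltered and matches the content."""
    record = {k: v for k, v in manifest.items() if k != "signature"}
    # Constant-time comparison; a missing signature compares against "" and fails.
    if not hmac.compare_digest(_sign(record), manifest.get("signature", "")):
        return False
    return record.get("content_sha256") == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    video = b"...generated video bytes..."
    manifest = make_manifest(video, "example-user", "hypothetical-avatar-tool",
                             "2024-04-20T12:00:00+00:00")
    print(verify_manifest(video, manifest))            # True
    print(verify_manifest(video + b"\x00", manifest))  # False: content was changed
```

The sketch detects changes to either the content or its metadata, but it offers no protection if the manifest is simply stripped from the file. That gap is why invisible watermarks and detection methods that work without any attached signal are needed as complementary measures.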
