Retrieval Augmented Fine-Tuning (RAFT): How language models become smarter with new knowledge

Introduction

Artificial intelligence and, in particular, large language models (LLMs) play a decisive role in today’s information society. They learn from huge amounts of text in order to understand and communicate in a human-like way. But what happens when they encounter new information that was not part of their original training data? This is where Retrieval Augmented Fine-Tuning (RAFT) comes in, an approach that aims to develop language models further and make them “smarter”. RAFT builds on the earlier Retrieval Augmented Generation (RAG) method. This article explains how RAFT works, how it optimizes models, and where it can be applied.

Basics of RAG (Retrieval Augmented Generation)

RAG is a technique in which a language model answers user questions with the help of relevant information retrieved from a large database. You can think of the language model as a virtual assistant with access to an extensive library: when a question is asked, the system searches this library for relevant information and uses it to formulate the answer. Retrieval is not always straightforward, however. A query containing the abbreviation “IR”, for example, could refer either to the “Imperial Roman Army” or to “Information Retrieval”. RAG shows weaknesses when it retrieves irrelevant information like this or does not make full use of the query’s context.

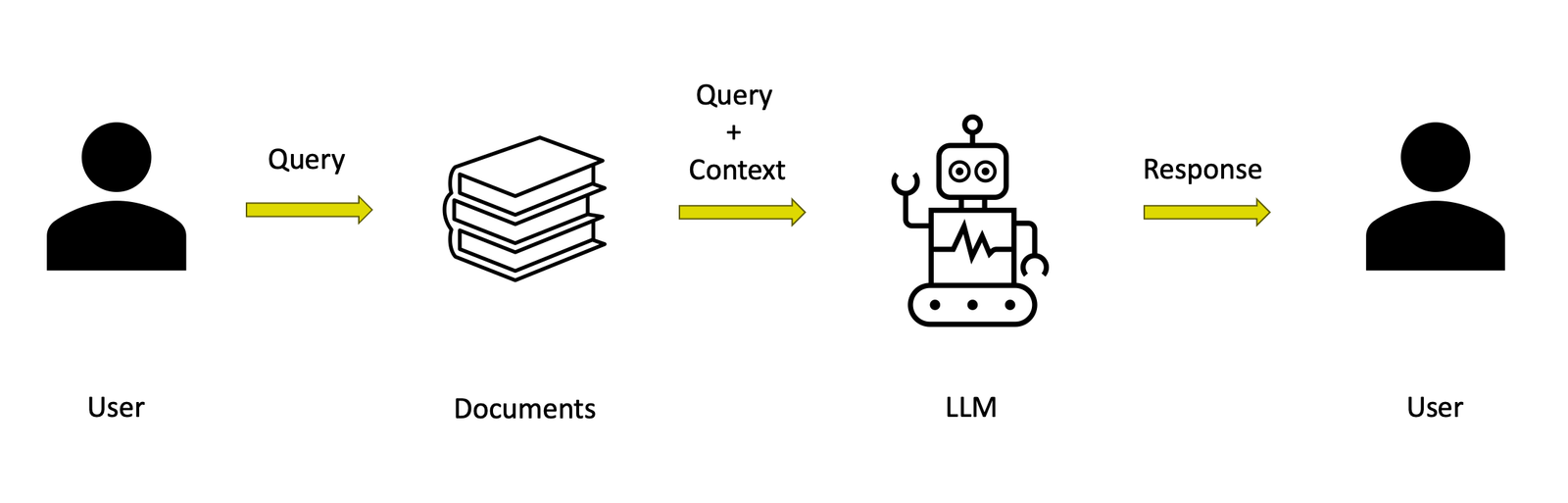

Illustration of the RAG process, showing how a language model responds to complex queries, taking into account different interpretations.
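
The basic retrieve-then-generate loop of RAG can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the document list and the function names `retrieve` and `build_prompt` are hypothetical, the keyword-overlap scoring stands in for the embedding-based vector search that RAG systems typically use, and the call to the language model itself is omitted.

```python
import re
from collections import Counter

# Hypothetical "library" of documents; a real system would query a search
# index or vector database instead of a Python list.
DOCUMENTS = [
    "Information retrieval (IR) is the task of finding relevant documents for a query.",
    "The Imperial Roman Army was the military force of the Roman Empire.",
    "Search engines rank web pages using information retrieval techniques.",
]


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most tokens with the query."""
    query_counts = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        # Multiset intersection: how many query tokens also occur in the document.
        return sum((query_counts & Counter(tokenize(doc))).values())

    return sorted(documents, key=overlap, reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved documents in front of the question for the model."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    question = "How do search engines use IR?"
    # The resulting prompt would then be sent to a language model (not shown).
    print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

Because this scoring only counts shared words, it has no understanding of which meaning of an abbreviation such as “IR” the user intends, which is one source of the irrelevant retrievals mentioned above.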

Introduction to RAFT (Retrieval Augmented Fine-Tuning)

RAFT extends RAG by fine-tuning the language model so that it handles the retrieved information better. This improves the model’s semantic analysis of the retrieved documents, which leads to more precise answers.

The main aim of RAFT is to enable language models to respond more effectively and specifically to users’ needs. Instead of training the model only on large amounts of general information, RAFT trains it specifically on the domain and the data that are relevant to the user’s requests.

You can think of RAFT as an advanced training program that helps language models specialize in certain topics while learning to disregard unnecessary information. It is particularly useful when the model has to deal with new, time-critical news or with specialist knowledge from a particular industry, and it helps integrate this knowledge effectively. RAFT also improves the model’s ability to extract the most useful information from a set of documents: when a question is asked, a RAFT-trained model has learned to ignore irrelevant “distractor documents” and to quote the exact answer from the relevant texts. This increases not only the accuracy of the answers but also the model’s analytical “thinking”.

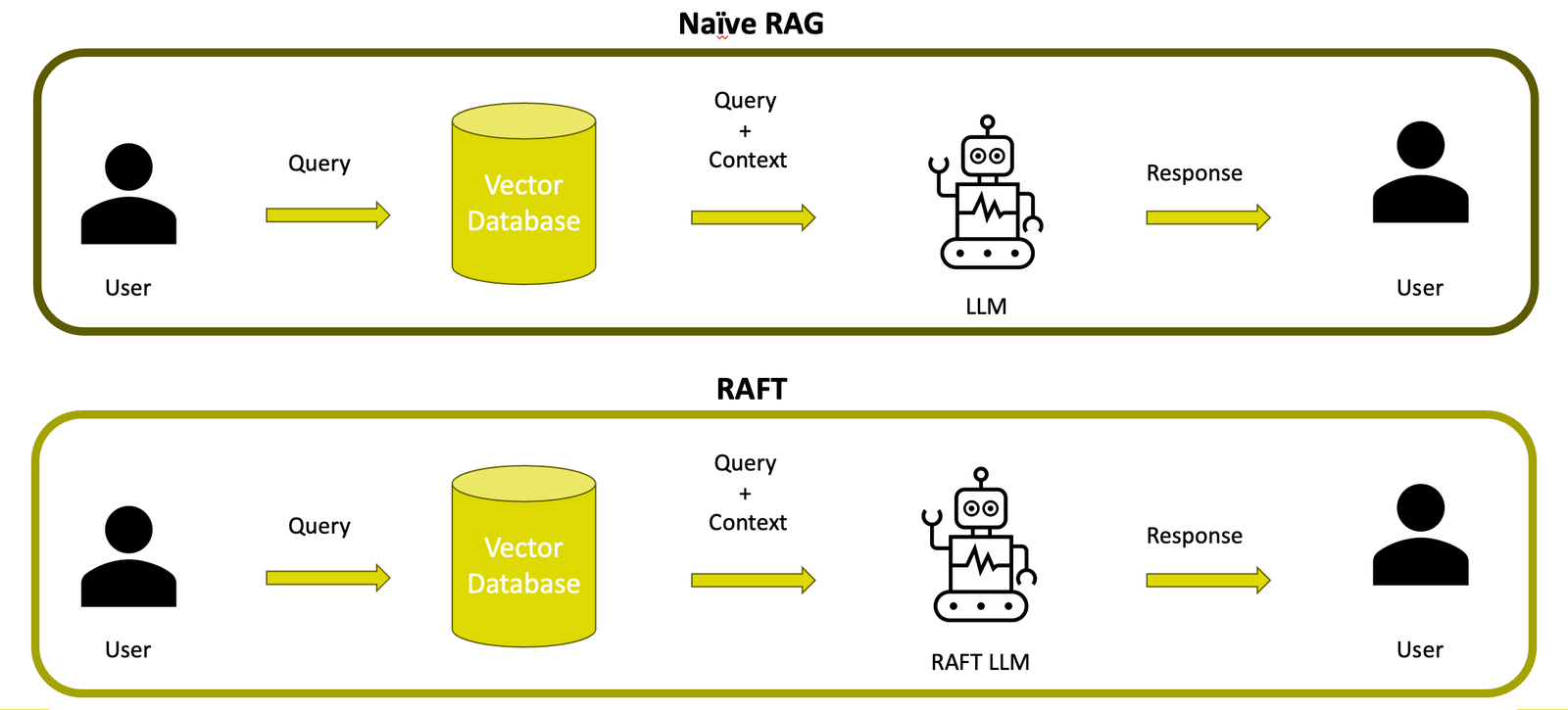

Illustration of the gains in information-processing efficiency and response accuracy achieved by RAFT (Retrieval Augmented Fine-Tuning) compared with naive RAG.
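
The idea of quoting the exact answer from the relevant texts, rather than from distractor documents, can be illustrated with a simple check of which documents actually contain a quoted passage. This is a hypothetical sketch: the function names and sample documents are invented for the example, and a RAFT-trained model learns this behavior during fine-tuning rather than by running an explicit string comparison.

```python
def normalize(text: str) -> str:
    """Collapse runs of whitespace so line breaks do not affect matching."""
    return " ".join(text.split())


def supporting_documents(quote: str, documents: list[str]) -> list[int]:
    """Return the indices of the documents that contain `quote` verbatim."""
    target = normalize(quote)
    return [i for i, doc in enumerate(documents) if target in normalize(doc)]


# Hypothetical retrieval result: one relevant ("oracle") text and two distractors.
documents = [
    "The Statue of Liberty was dedicated in 1886.",
    "The Eiffel Tower was completed in 1889 for the World's Fair.",
    "Paris is the capital of France.",
]

if __name__ == "__main__":
    print(supporting_documents("completed in 1889", documents))  # [1]
    print(supporting_documents("completed in 1887", documents))  # [] (unsupported quote)
```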

How does RAFT work?

Fine-tuning and use of specific documents

In RAFT, the LLM is fine-tuned on specific documents, that is, on documents selected specifically for the user’s context. You can compare this to a student who, instead of studying everything, focuses on the topics that will come up in the exam. This significantly improves the model’s understanding and response accuracy.
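
In practice, fine-tuning on selected documents means turning them into training records. The following sketch assumes a generic prompt/completion JSON Lines format; the corpus, the field names `prompt` and `completion`, and the helper functions are hypothetical, and the exact record layout depends on the fine-tuning framework you use.

```python
import json

# Hypothetical corpus in which every document is tagged with its domain.
CORPUS = [
    {"domain": "medicine", "text": "Aspirin inhibits the enzyme cyclooxygenase."},
    {"domain": "law", "text": "A contract requires offer, acceptance and consideration."},
]


def select_domain(corpus: list[dict], domain: str) -> list[str]:
    """Keep only the documents that belong to the user's domain."""
    return [entry["text"] for entry in corpus if entry["domain"] == domain]


def to_record(question: str, documents: list[str], answer: str) -> dict:
    """Combine the context documents and the question into one training record."""
    context = "\n\n".join(
        f"Document {i}: {doc}" for i, doc in enumerate(documents, start=1)
    )
    return {"prompt": f"{context}\n\nQuestion: {question}\nAnswer:", "completion": answer}


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


if __name__ == "__main__":
    docs = select_domain(CORPUS, "medicine")
    record = to_record("Which enzyme does aspirin inhibit?", docs, "Cyclooxygenase.")
    print(to_jsonl([record]), end="")
```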

Generation of synthetic data

An interesting aspect of RAFT is the generation of synthetic data. The language model uses real user queries and the corresponding responses to generate artificial (synthetic) questions and answers, which are then used to train the model further. This trains the model to respond correctly to new and unfamiliar requests.
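
A generation step of this kind could look roughly like the sketch below: a few real question/answer pairs serve as examples, a language model is asked to write new pairs about a document, and its reply is parsed into training data. The `llm` argument is a hypothetical stand-in for whatever model API is used, and the prompt wording and the “Q:”/“A:” output format are assumptions for illustration.

```python
from typing import Callable


def build_generation_prompt(document: str, examples: list[tuple[str, str]], n: int) -> str:
    """Ask the model for n new Q/A pairs in the style of real user examples."""
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return (
        "Example questions from real users, with their answers:\n"
        f"{shots}\n\n"
        f"Write {n} new question/answer pairs in the same style that can be "
        "answered from the document below. Use the format 'Q: ...' and 'A: ...'.\n\n"
        f"Document:\n{document}"
    )


def parse_pairs(text: str) -> list[tuple[str, str]]:
    """Extract (question, answer) pairs; questions without an answer are dropped."""
    pairs: list[tuple[str, str]] = []
    question = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Q:"):
            question = line[2:].strip()
        elif line.startswith("A:") and question is not None:
            pairs.append((question, line[2:].strip()))
            question = None
    return pairs


def generate_synthetic_pairs(
    llm: Callable[[str], str],
    document: str,
    examples: list[tuple[str, str]],
    n: int = 3,
) -> list[tuple[str, str]]:
    """Prompt the (hypothetical) model and parse its reply into training pairs."""
    return parse_pairs(llm(build_generation_prompt(document, examples, n)))


if __name__ == "__main__":
    # Stub that returns a fixed reply, used here in place of a real LLM.
    def fake_llm(prompt: str) -> str:
        return "Q: When was the tower completed?\nA: In 1889."

    examples = [("Where is the Eiffel Tower?", "In Paris.")]
    print(generate_synthetic_pairs(fake_llm, "The Eiffel Tower was completed in 1889.", examples))
```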

Chain of Thought (CoT)

A special method in RAFT is the “Chain of Thought”. Here the model is instructed to explain its thought processes in steps before giving a final answer. This is similar to the way a person thinks through a problem step by step before presenting a solution. This method helps the model to be more logical and transparent in its answers.
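
For training, a chain-of-thought answer has to be written in a form the model can learn and that a program can still parse. The sketch below numbers the reasoning steps and separates the final answer with a marker; the marker string `<ANSWER>:` and the function names are assumptions chosen for this example, not a fixed RAFT format.

```python
from typing import Optional

# Hypothetical marker that separates the reasoning from the final answer.
ANSWER_TAG = "<ANSWER>:"


def format_cot_target(steps: list[str], final_answer: str) -> str:
    """Build a training target: numbered reasoning steps, then the final answer."""
    reasoning = "\n".join(f"Step {i}: {s}" for i, s in enumerate(steps, start=1))
    return f"{reasoning}\n{ANSWER_TAG} {final_answer}"


def extract_final_answer(output: str) -> Optional[str]:
    """Return the text after the last answer marker, or None if it is missing."""
    _, marker, answer = output.rpartition(ANSWER_TAG)
    return answer.strip() if marker else None


if __name__ == "__main__":
    target = format_cot_target(
        [
            "The question asks when the Eiffel Tower was completed.",
            "Document 2 states: 'The Eiffel Tower was completed in 1889.'",
        ],
        "1889",
    )
    print(target)
    print(extract_final_answer(target))
```

Keeping the reasoning and the final answer separable means the answer can be checked automatically while the reasoning stays visible to the reader.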

Oracle and distractor fragments

To test and improve the quality of the answers, RAFT uses two types of information blocks: “oracle” fragments, which contain the direct and correct answer to a query, and “distractor” fragments, which contain irrelevant information. Training on both teaches the model to distinguish relevant from irrelevant information.
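
The assembly of a training example from oracle and distractor fragments can be sketched as follows. The function name, parameters and default values are hypothetical. The paper that introduced RAFT also trains on a share of examples in which the oracle is deliberately left out, so that the model does not assume the answer is always in the context; `p_oracle` models that share here, and its default of 0.8 is an arbitrary illustrative value rather than a recommended setting.

```python
import random
from typing import Optional


def build_raft_example(
    question: str,
    answer: str,
    oracle: str,
    distractor_pool: list[str],
    num_distractors: int = 3,
    p_oracle: float = 0.8,
    rng: Optional[random.Random] = None,
) -> dict:
    """Assemble one training example from an oracle and sampled distractors.

    With probability p_oracle the oracle document is included; otherwise the
    context holds distractors only.
    """
    rng = rng if rng is not None else random.Random()
    candidates = [doc for doc in distractor_pool if doc != oracle]
    documents = rng.sample(candidates, num_distractors)
    has_oracle = rng.random() < p_oracle
    if has_oracle:
        documents.append(oracle)
    rng.shuffle(documents)  # hide the oracle's position among the distractors
    return {"question": question, "documents": documents, "answer": answer, "has_oracle": has_oracle}


if __name__ == "__main__":
    pool = [f"Distractor text {i}" for i in range(10)]
    example = build_raft_example(
        "When was the Eiffel Tower completed?",
        "1889",
        "The Eiffel Tower was completed in 1889.",
        pool,
        rng=random.Random(42),
    )
    print(example["has_oracle"], len(example["documents"]))
```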

Areas of application of RAFT

RAFT is useful wherever accurate and up-to-date information is crucial. It can serve as the basis for advanced chatbots that communicate more effectively thanks to better text generation and improved algorithms.

In the legal field, RAFT can quickly identify relevant laws and precedents. This has the potential to speed up the legal process and increase the accuracy of legal documentation.

In medicine, RAFT supports diagnosis and treatment by providing access to the latest research results and clinical data.

RAFT enables financial analysts to integrate real-time market data and economic reports. This ability is crucial for accurate market forecasts and sound economic analyses.

Opportunities and limitations

The effectiveness of RAFT has already been tested in various areas, including medical research, quiz question databases and more. The results show that RAFT models are better able to provide accurate and relevant information. RAFT also makes it possible to fine-tune models for specific domains, and this customization increases their value for industry-specific applications.

Because RAFT models still retrieve documents when answering, they can make use of new data as soon as it is added to the document collection, without having to be completely retrained. This enables continuous updating and scaling of the AI models with minimal adjustments. RAFT represents a significant advance in the development of AI software by enabling greater customization to specific information requirements.
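
Using new information without retraining follows from the retrieval step: the model’s weights stay fixed, and only the document collection it searches changes. The minimal in-memory store below illustrates this; the class and method names are hypothetical, and the word-overlap ranking is a simplification of the search index or vector database a real deployment would use.

```python
import re


class DocumentStore:
    """Hypothetical in-memory document collection searched at query time."""

    def __init__(self) -> None:
        self._docs: list[str] = []

    def add(self, doc: str) -> None:
        """Make a new document available immediately, with no retraining."""
        self._docs.append(doc)

    @staticmethod
    def _tokens(text: str) -> set[str]:
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k documents sharing the most words with the query."""
        terms = self._tokens(query)
        ranked = sorted(self._docs, key=lambda d: len(terms & self._tokens(d)), reverse=True)
        return ranked[:k]


if __name__ == "__main__":
    store = DocumentStore()
    store.add("Quarterly revenue grew by four percent.")
    query = "latest interest rate decision"
    print(store.search(query))  # only the older, unrelated report is available
    store.add("The central bank raised the interest rate today.")
    print(store.search(query))  # the newly added document is found right away
```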

Although RAFT offers many advantages, there are also challenges in its implementation. The quality of the results depends heavily on the quality and variety of the data. Data protection concerns can also arise, especially if sensitive information has to be processed. It is also important to note that RAFT requires a complex infrastructure, which can make it difficult to implement in resource-constrained environments.

Conclusion

In summary, RAFT is an innovative method for improving language models. It achieves this through targeted training and the use of techniques such as CoT and targeted information processing. RAFT enables companies and individuals to develop customized solutions tailored to their specific needs. It represents a significant advance in the development of AI models and continues to push the boundaries of what is possible with artificial intelligence.