
16 Oct 2025

Shadow AI: The Underestimated Danger in Everyday Corporate Life

Generative AI is booming – but often hidden

AI tools like ChatGPT or Gemini have gained millions of users in a very short time. In companies, a phenomenon is emerging that experts refer to as "Shadow AI": unauthorized, uncontrolled use of AI tools, often without the knowledge or approval of the IT department.

The scale in numbers:

1.8 billion monthly visits to GenAI systems

500–600 million daily users worldwide

20–24 million people in Germany use them at least once a month

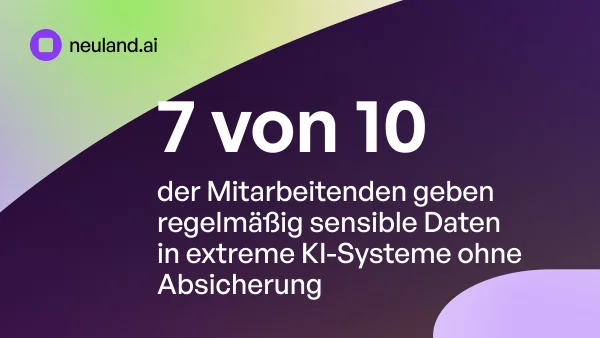

68% of employees use AI systems in unauthorized ways

7 out of 10 use GenAI at work without their employer's knowledge

57% regularly enter sensitive data into external AI services

From opportunity to risk

The parallels to "Shadow IT" are obvious. What initially boosts productivity and innovation becomes a significant danger when used without control: data protection violations, loss of trade secrets, compliance problems, and a complete loss of control over business-critical information.

The new phenomenon: Bring Your Own AI (BYOAI)

Closely linked to Shadow AI is the "Bring Your Own AI" (BYOAI) trend: employees use private or freely available AI tools for work tasks, often out of convenience, under time pressure, or because no official corporate solution exists.

The risks

Companies have no control over what data is entered. Private accounts are not subject to the company's security and compliance standards. Data may be stored on third-party servers or used for training purposes. BYOAI is therefore not merely a convenience for employees; in many cases, it is the direct route by which Shadow AI enters the company.

Warnings from business and research

As early as mid-2024, the research and advisory firm Gartner classified "Shadow AI" as one of the five biggest organizational risks in digital transformation, on par with issues such as cybersecurity and regulatory non-compliance.

Gartner specifically warned about:

Uncontrolled data flow: Business and customer data end up in external environments, often with no way to delete them.

Loss of traceability: AI-based decisions and content are neither documented nor verifiable.

Legal risks: Violations of data protection laws such as the GDPR can result in heavy fines.

McKinsey and PwC explored the topic in more depth with practical case studies:

A manufacturing SME lost confidential production plans after an employee entered them into a public AI tool. Parts of the data later surfaced in the training contexts of other providers.

An international consulting firm used unverified AI-generated figures in client reports. The correction and reputation management cost more than 1.2 million euros.

A public authority uploaded internal documents from application procedures to a publicly accessible AI system. The resulting data protection violations led to protracted proceedings and a loss of trust.

These examples show that Shadow AI is not a theoretical risk but an everyday reality, with follow-up costs that can quickly run into the millions.

Why this topic needs to be addressed now

Modern AI marks a historic turning point. Its economic potential for Germany is estimated at up to 330 billion euros annually (IW Köln). Without clear internal guidelines, secure platforms, and transparent processes, however, this progress threatens to become a danger to companies, public authorities, and the economy as a whole.

Germany is at the steam-engine moment of the digital era. Artificial intelligence can create a new economic miracle. Yet millions of employees are currently using AI tools such as ChatGPT without their employer's knowledge, even with sensitive data. Shadow AI and the "Bring Your Own AI" trend jeopardize data protection, compliance, and the core of corporate value creation. If we want to seize the opportunities of AI, we must integrate it into everyday corporate life now: securely, transparently, and with data sovereignty.

The government advises companies to take the following steps now:

1. Formulate usage guidelines: Define which AI tools are allowed and how to handle sensitive data.

2. Provide secure AI access: Introduce approved platforms that ensure data protection and compliance.

3. Raise awareness: Train employees and inform them about risks.

4. Establish monitoring: Track the use of external AI tools to detect and prevent misuse.
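Steps 1 and 4 can be made more concrete with a small example. The following Python sketch is an illustration only, not a description of any particular product: it checks web proxy log lines against a short list of known GenAI domains and skips tools on an approved allowlist. The log format (`<timestamp> <user> <url>`), the domain lists, the internal host `ai-hub.example.com`, and the function names `matches_domain` and `flag_shadow_ai` are all hypothetical assumptions; a real setup would use its own proxy or firewall logs and a maintained domain list.

```python
from collections.abc import Iterable
from urllib.parse import urlparse

# Illustrative, incomplete list of public GenAI services (assumption, not a maintained feed).
KNOWN_GENAI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com"}

# Hypothetical allowlist from the usage guidelines, e.g. an internal, approved AI platform.
APPROVED_DOMAINS = {"ai-hub.example.com"}


def matches_domain(host: str, domains: set[str]) -> bool:
    """Return True if host equals one of the domains or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def flag_shadow_ai(
    log_lines: Iterable[str],
    genai: set[str] = KNOWN_GENAI_DOMAINS,
    approved: set[str] = APPROVED_DOMAINS,
) -> list[tuple[str, str]]:
    """Return (user, host) pairs for requests to GenAI services that are not approved.

    Assumes a hypothetical log format with one request per line:
    '<timestamp> <user> <url>'.
    """
    findings = []
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed or empty lines
        _, user, url = parts[:3]
        host = urlparse(url).hostname or ""  # .hostname is returned in lower case
        if matches_domain(host, approved):
            continue  # approved tool: permitted by the usage guidelines
        if matches_domain(host, genai):
            findings.append((user, host))
    return findings


if __name__ == "__main__":
    sample_log = [
        "2025-10-16T09:00 alice https://chatgpt.com/c/123",
        "2025-10-16T09:05 bob https://ai-hub.example.com/chat",
        "2025-10-16T09:10 carol https://www.example.org/",
        "2025-10-16T09:15 dave https://GEMINI.google.com/app",
    ]
    for user, host in flag_shadow_ai(sample_log):
        print(f"unapproved GenAI use: {user} -> {host}")
```

Run on the sample log, the script reports two findings: alice on chatgpt.com and dave on gemini.google.com, while bob's use of the approved platform is not flagged. A check like this only detects use after the fact; it complements, rather than replaces, the guidelines, approved platforms, and training in steps 1 to 3.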

From danger to opportunity

Shadow AI and BYOAI are not fringe issues for the IT department – they concern the entire organization. The unauthorized use of private or freely available AI tools may seem convenient in the short term but can lead to serious data protection, compliance, and security problems over the long term.

However, those who define clear rules now, provide secure and authorized AI access, and raise employee awareness can minimize risks and fully exploit the benefits of generative AI. The foundation for this is a secure, reliable, and scalable AI platform such as the neuland.ai HUB. In this way, an immediate danger becomes a lasting opportunity for innovation, higher productivity, and competitive advantage, without losing control or trustworthiness.